In September 2020, the UK introduced the “Age-Appropriate Design Code,” which primarily focuses on protecting children online.

And with good reason. Targeted advertisements, customized suggestions, and an endless list of things for you to binge on—tech companies know what you want and what you’ll click on. And everyone is on the receiving end of these strategies. Even teens, tweens, and toddlers.

Years of psychological research have told us that what may be acceptable for adults may not be so great for kids’ psychological, mental, and emotional wellbeing. But unless these things are regulated, companies will keep using the same tactics for all age groups. Why? Because, in the end, generating revenue is the ultimate goal, one way or the other.

And that’s what the UK is trying to fix with its document, “Age-appropriate design: a code of practice for online services.”

Kidron’s InRealLife: It All Started Here

Former film director and producer Beeban Kidron, who worked on films like Bridget Jones: The Edge of Reason and Cinderella, is now working as a crossbench Peer in the UK House of Lords and the chair of the 5Rights Foundation.

In 2013, Kidron produced and directed a documentary entitled “InRealLife,” which focuses on understanding what exactly the internet is and what it’s doing to our children.

This film takes us on an eye-opening journey from British teens’ bedrooms to Silicon Valley. The filmmaker suggested that while the internet’s original purpose was to promote free and open connectivity, it’s increasingly ensnaring young people in a heavily commercial world.

Glittering and beguiling on the outside, the modern-day internet can be heavily addictive and isolating.

Quietly building its case, InRealLife asks if we as parents can afford to stand by while our children’s focus, attention, and time are being outsourced to the internet or the people who control it.

Beeban Kidron Vs. Silicon Valley

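Almost a decade after InRealLife was released, Kidron has helped push through a Children’s Code in hopes of changing this landscape forever. The code is not an amendment to the Data Protection Act 2018 itself. It is a statutory code of practice that section 123 of the Act requires the ICO to produce, following an amendment Kidron tabled in the House of Lords. It came into force in September 2020 and applied in full from September 2021.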

The Children’s Code requires online services to “put the best interests of the child first” in their design for apps, websites, games, and internet-connected toys that are likely to be used by kids. This is a significant effort toward protecting children online and keeping them safe from many problems that tag along with modern technology.

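The Children’s Code sets out 15 standards that tech companies must meet. Companies that fail to conform risk enforcement action under UK data protection law, including fines of up to £17.5 million or 4% of their annual global turnover, whichever is higher. Let’s have a look at these 15 standards.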

Age-Appropriate Design: A Code of Practice for Online Services

Today, big data resides at the heart of the digital services that children are using every day. From the moment they open an app, load a website, or play a game, these services start gathering data. These data points include:

- Who is using the service

- How they are using it

- How often they use it

- Where they are

- Which device they’re using

- And more…

This information is then fed into machine learning and artificial intelligence systems that learn how to persuade the user to spend more time on the service. They do this by showing content the user is most likely to engage with or displaying advertisements they’re most likely to click on.

The Information Commissioner’s Office (ICO) says that “for all the benefits the digital economy can offer children, we are not currently creating a safe space for them to learn, explore and play.”

And this statutory code of practice aims to change that—not by protecting children from the digital world but by protecting them within it. Have a look.

Best Interests of the Child

“In all actions concerning children, whether undertaken by public or private social welfare institutions, courts of law, administrative authorities or legislative bodies, the best interests of the child shall be a primary consideration.”

This is an excerpt from Article 3 of the United Nations Convention on the Rights of the Child (UNCRC), and it’s the basis for the code’s “best interests of the child” standard.

But what does “best interests of the child” mean exactly?

It means that companies must take the rights set out in the UNCRC, and the child’s holistic development, into account when designing a product or service.


Data Protection Impact Assessments

A Data Protection Impact Assessment (DPIA) is a defined process that helps companies identify and minimize the data protection risks of their services.

A DPIA helps mitigate the specific risks that may arise from processing children’s personal data.

The ICO requires companies to build DPIAs into their design process from the start, which also makes it easier for them to scale their app, website, or service later.

Age-Appropriate Application

The age-appropriate application standard requires companies to address the different needs of children at different ages and stages of development.

This section of the code focuses on giving children an appropriate level of protection in how companies use their personal data.

The ICO, however, has given a certain degree of flexibility to companies to determine how to apply this standard in the circumstances and context of their online service.

Here’s what the ICO says:

“Take a risk-based approach to recognizing the age of individual users and ensure you effectively apply the standards in this code to child users. Either establish age with a level of certainty that is appropriate to the risks to the rights and freedoms of children that arise from your data processing, or apply the standards in this code to all your users instead.”

Transparency

This standard requires companies to be transparent, honest, and open about what users can expect when accessing their online services.

“The privacy information you provide to users, and other published terms, policies, and community standards, must be concise, prominent, and in clear language suited to the age of the child. Provide additional specific ‘bite-sized’ explanations about how you use personal data at the point that use is activated.”

Detrimental Use of Data

The detrimental use of data standard forbids companies from using children’s data in ways that may harm their wellbeing.

Companies also have to design their online services, websites, apps, and games so that children can easily disengage without feeling pressured or disadvantaged.

The ICO says:

“Do not use children’s personal data in ways that have been shown to be detrimental to their wellbeing, or that go against industry codes of practice, other regulatory provisions, or Government advice.”

Policies and Community Standards

This standard simply requires companies to keep their own commitments.

If a company’s policy promises that all content published on its site will be child-friendly, it needs to make sure that it is. The ICO also expects companies to have systems in place to keep it that way.

There have been many incidents where companies have failed to comply with their own policies. Facebook, for example, states that it doesn’t tolerate harmful content, yet many users have complained that it fails to remove harmful posts even after repeated reports.

The ICO says:

“Uphold your own published terms, policies, and community standards (including but not limited to privacy policies, age restriction, behavior rules, and content policies).”

Default Settings

This standard gives users a choice over their privacy settings or, in simple terms, control over how companies use their personal data.

The ICO requires companies to set privacy settings to “high privacy” by default and let users choose to lower them, unless the company can demonstrate a compelling reason for a different default, taking account of the best interests of the child.

Data Minimization

Collect and retain only the minimum amount of personal data you need to provide the parts of your service a child is actively and knowingly using, says the “Data Minimization” standard.

This principle comes from Article 5(1)(c) of the General Data Protection Regulation, or the GDPR.

It says that personal data shall be “adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed (‘data minimization’).”

Companies can put this into practice by giving children separate choices over which elements of the service they activate.

Data Sharing

“Do not disclose children’s data unless you can demonstrate a compelling reason to do so, taking account of the best interests of the child.”

This standard takes data sharing extremely seriously and forbids companies from disclosing children’s personal data to third parties unless they can demonstrate a compelling reason to do so.

Third-party data sharing is common, and often necessary, because most companies work with several partners to deliver their products and services.

But not all third-party sharing is ethical or lawful. This standard aims to minimize that by requiring companies to justify why they’re sharing children’s data with third parties.

Geolocation

Recital 38 to the GDPR states that:

“Children merit specific protection with regard to their personal data, as they may be less aware of the risks, consequences and safeguards concerned and their rights in relation to the processing….”

The geolocation standard builds on this recital and requires companies to keep geolocation switched off by default unless they have a compelling reason to do otherwise.

This is important because the ability to track a child’s physical location can put the child’s physical and mental safety at risk.

In simple terms, if geolocation data isn’t kept private, children become vulnerable to risks like physical and mental abuse, abduction, sexual abuse, and trafficking.

The ICO says:

“Switch geolocation options off by default (unless you can demonstrate a compelling reason for geolocation to be switched on by default, taking account of the best interests of the child), and provide an obvious sign for children when location tracking is active. Options which make a child’s location visible to others should default back to ‘off’ at the end of each session.”

Parental Controls

Parental controls are popular tools that allow parents or guardians to limit their child’s online activity, thereby reducing the risks their child may be exposed to.

This includes things like:

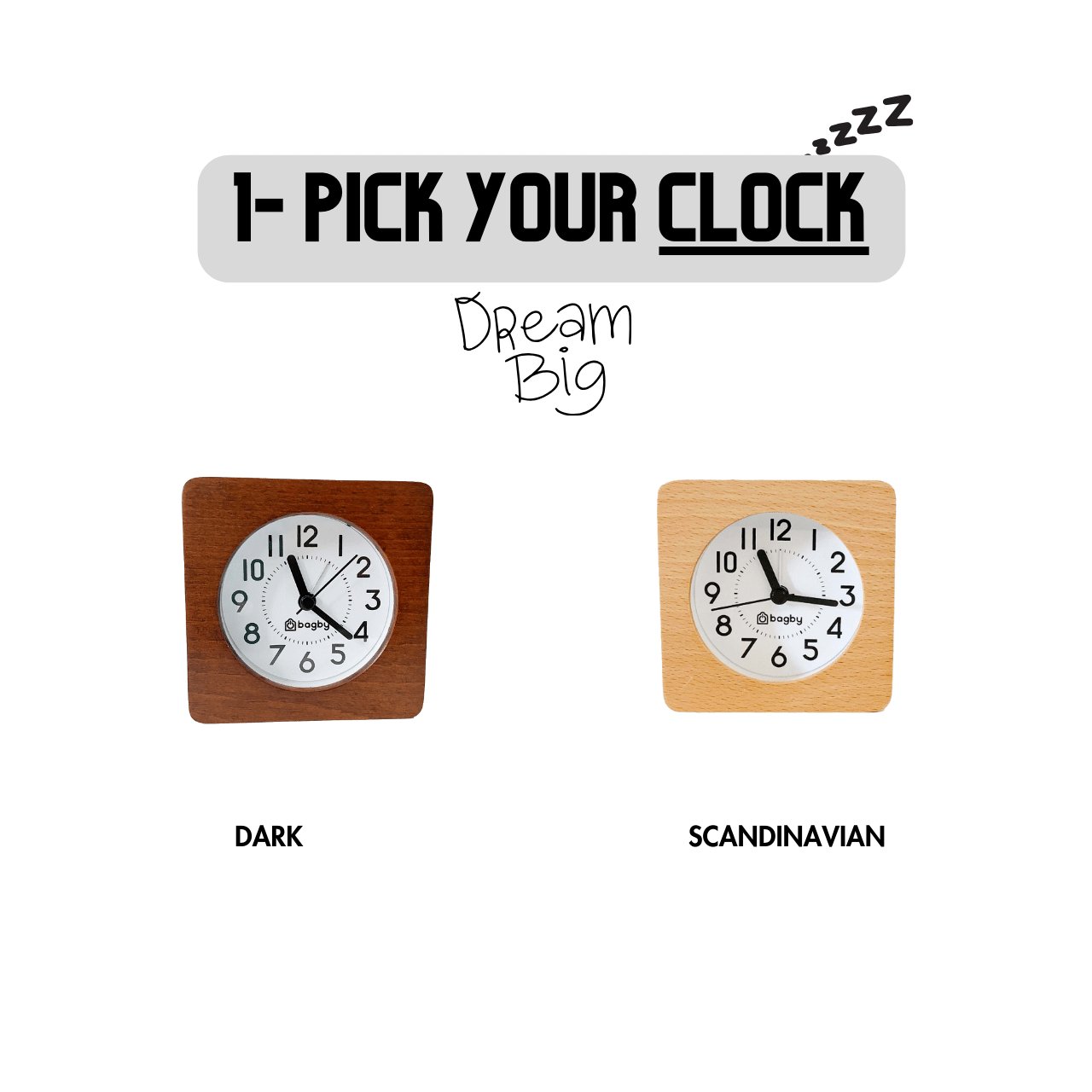

- Setting time limits or bedtimes

- Restricting internet access to pre-approved sites only

- And restricting in-app purchases.

Some parental controls also let parents monitor a child’s online activity or track their physical location.

If a company provides parental controls in its service, this standard requires it to give the child age-appropriate information about them.

In simple terms, online services must provide an obvious sign to children when their parents monitor their use or track their location.

Profiling

Article 4(4) of the GDPR defines profiling as:

“Any form of automated processing of personal data consisting of the use of personal data to evaluate certain personal aspects relating to a natural person, in particular, to analyze or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behavior, location or movements.”

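This standard requires companies to turn off profiling by default unless they have a compelling reason for profiling to be on by default, taking account of the best interests of the child. Even then, they may only profile children if they have measures in place to protect them from harmful effects, such as being fed content that is detrimental to their health or wellbeing.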

Nudge Techniques

Nudge techniques are design features that encourage users to follow the designer’s preferred paths when they’re making a decision.

A common example is a consent prompt where the ‘yes’ button is large and green, while the ‘no’ button is in small print and grey.

Nudge techniques like this can be very effective at steering users toward the option that suits the company, effectively making the decision for them.

Another nudge technique is making one option much less cumbersome or time-consuming than the alternative. For example, a service might offer the low-privacy option in one click while the high-privacy alternative takes six clicks, which pushes many users toward the low-privacy choice.

This standard forbids companies from using nudge techniques to lead or encourage children to provide unnecessary personal data or to weaken or turn off their privacy protections.

Connected Toys and Devices

Connected toys and devices are physical gadgets that connect to the internet. Some of these toys have cameras and microphones, and children often use them without adult supervision.

This opens up an opportunity for data misuse.

This standard requires companies that provide connected toys or devices to include effective tools that enable conformance with the code. They must also make sure their data processing is lawful, fair, and transparent, as GDPR Article 5(1)(a) requires.

Online Tools

The GDPR gives users several rights, including:

- The right to rectification

- The right to erasure

- The right to restrict processing

- The right to data portability

- The right to object

- Rights in relation to automated decision-making and profiling

This standard requires companies to provide prominent and accessible tools to help children exercise their data protection rights and report concerns.

Time-Frame to Comply

The code came into force in September 2020, but the ICO gave companies a 12-month transition period, so it applied in full from September 2021.

Several tech giants changed their products in response to the code. For example:

- Instagram now prevents adults from messaging teens who don’t follow them. It also sets new accounts to private by default for anyone under 16.

- TikTok introduced a bedtime feature that pauses push notifications for users aged 13-15 after 9 PM.

- YouTube turned off autoplay by default for users aged 13-17.

- And finally, Google blocked targeted advertising for users under the age of 18.

Not Just in the UK

In May 2022, the California Age-Appropriate Design Code Act (ADCA) passed the California Assembly unanimously and moved to the Senate for consideration.

The ADCA is heavily inspired by the United Kingdom’s Age Appropriate Design Code (AADC) and aims to regulate the processing, collection, storage, and transfer of children’s data.

Learn more about the ADCA on the Future of Privacy Forum website. And you can read the full version of the “Age-Appropriate Design Code” by visiting the Information Commissioner’s Office’s (ICO) website.

Final Thoughts

The law can only push companies so far. Codes like these do help, but ultimately, making technology safer for your children is in your hands.

Research suggests that prolonged tech use can affect you physically, mentally, emotionally, and psychologically, and the risks are even higher for children.

But there are things you can do to mitigate these problems. You can learn more about them in my “Healthier Tech” blog.

I recommend you start with my “Effects of Technology Use on Your Child’s Psychological Development: The Good & The Bad” post.